VL-BERT-Graph: Graph-enhanced Transformers for Referring Expressions Comprehension

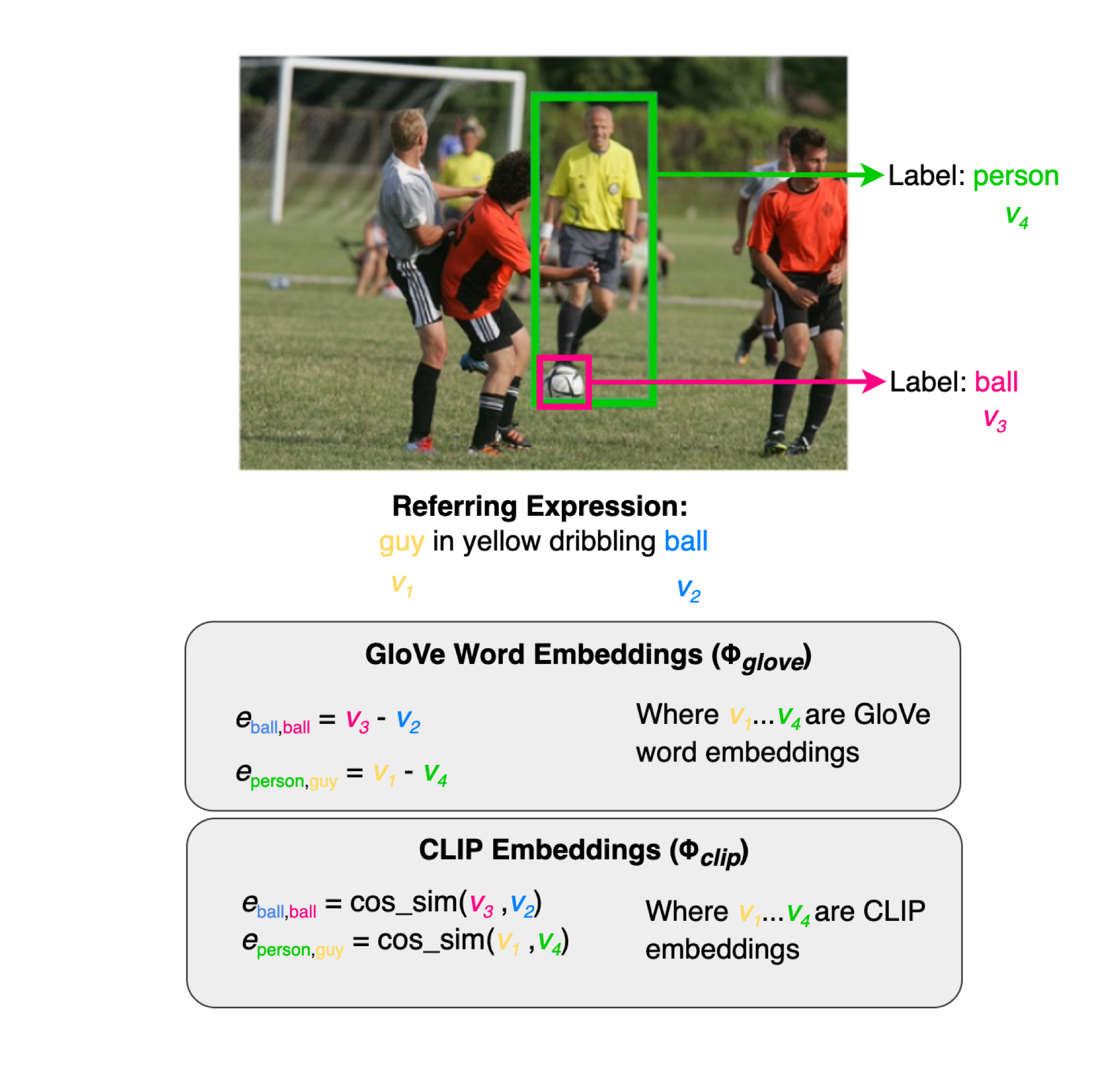

We explore a simple method for incorporating inter-token relationships into a Transformer before any training is performed, using graphs with edge features. In VL-BERT-Graph, we build a fully connected graph over the input tokens, where each edge represents the similarity between the two tokens it connects, obtained using GloVe and CLIP. A message-passing GNN then incorporates these edge features into either the input tokens or the model's output encoding, and the Transformer is trained with edge-feature attention masks.
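The graph construction described above can be illustrated with a minimal NumPy sketch. It is not the project's implementation. Random vectors stand in for the GloVe/CLIP token embeddings, cosine similarity is assumed as the edge feature, and the single softmax-weighted aggregation step is a simplified stand-in for the message-passing GNN. The function names `cosine_similarity_matrix` and `message_passing_step` are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(emb):
    """Pairwise cosine similarity between the rows of `emb` (n_tokens, dim).

    Entry (i, j) plays the role of the edge feature between tokens i and j
    in a fully connected graph (assumption: similarity is cosine similarity).
    """
    norms = np.linalg.norm(emb, axis=1, keepdims=True)
    unit = emb / np.clip(norms, 1e-12, None)  # guard against zero vectors
    return unit @ unit.T


def message_passing_step(tokens, edge_weights):
    """One illustrative message-passing step (not the authors' GNN).

    Each token receives a weighted average of the other tokens, with weights
    given by a softmax over its edge features, and adds it to its own vector.
    """
    w = np.exp(edge_weights)          # cosine values lie in [-1, 1], so exp is safe
    np.fill_diagonal(w, 0.0)          # no self-loops: a token does not message itself
    w = w / np.clip(w.sum(axis=1, keepdims=True), 1e-12, None)
    messages = w @ tokens             # aggregate neighbour features
    return tokens + messages          # residual update


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    token_emb = rng.normal(size=(4, 8))    # stand-in for GloVe/CLIP embeddings
    token_feats = rng.normal(size=(4, 16)) # stand-in for Transformer input tokens
    edges = cosine_similarity_matrix(token_emb)
    updated = message_passing_step(token_feats, edges)
    print(edges.shape, updated.shape)
```

The updated token features keep their original shape, so in a setup like this they could be passed to the Transformer in place of the raw inputs, and the same similarity matrix could be reused when building attention masks.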
