VLC-BERT: Visual Question Answering with Contextualized Commonsense Knowledge

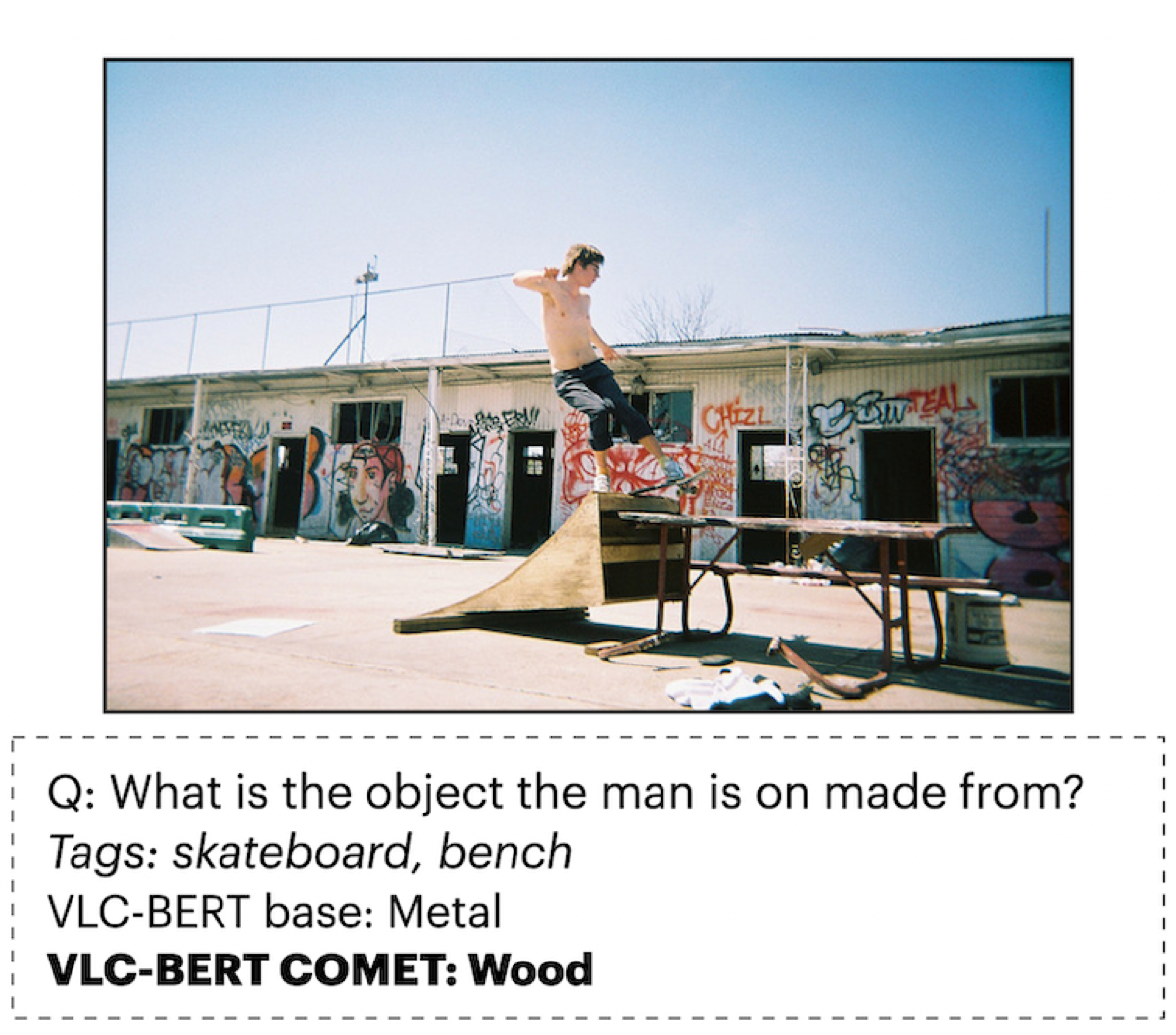

We present VLC-BERT, a new Vision-Language-Commonsense transformer model that incorporates contextualized knowledge using the Commonsense Transformer (COMET) to solve Visual Question Answering (VQA) tasks requiring commonsense reasoning. VLC-BERT outperforms existing models that rely on static knowledge bases, and we provide a detailed analysis of which questions benefit from COMET's contextualized commonsense knowledge.

Paper | GitHub | arXiv